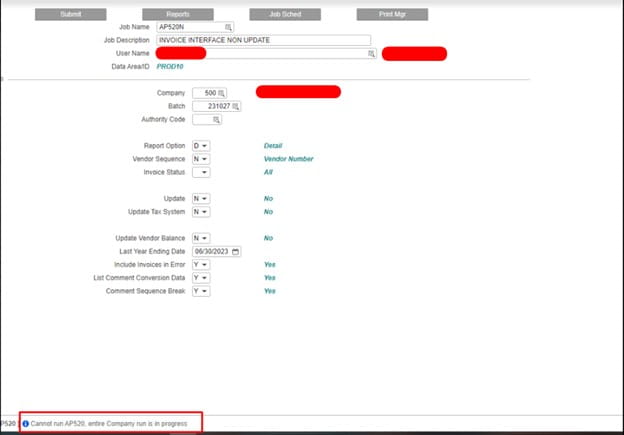

In Lawson, you may run into the following error when a user tries to change or run a job:

“Cannot run AP520, entire Company run is in progress”

The error appears at the bottom of the screen (see the red box in the screenshot below).

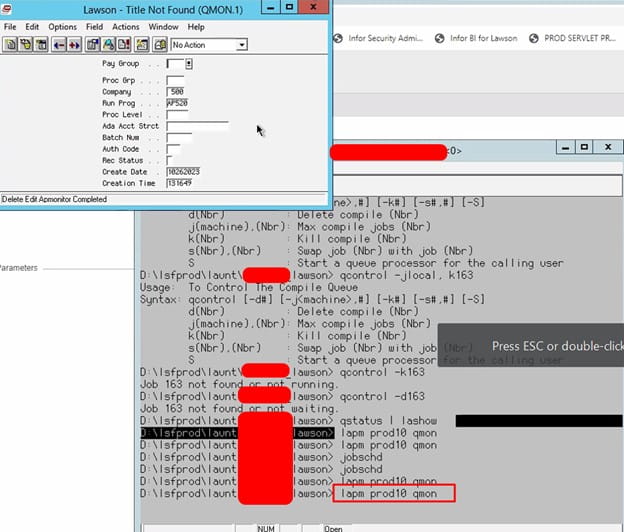

To resolve this, open and then log in to Lawson Interface Desktop (LID):

Type the following command and press Enter: `lapm <enter_your_productline> qmon`

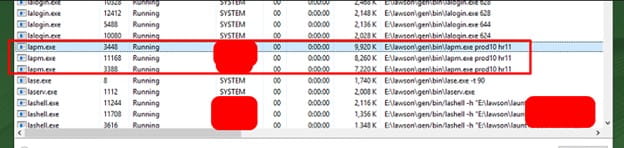
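
As a small illustration of how the product line placeholder in the command above is filled in, the sketch below builds the command text for a hypothetical product line named `PROD`. The helper name `build_qmon_command` and the product line name are assumptions made for this example. The script only prints the text to type at the LID prompt and does not run anything in Lawson.

```python
def build_qmon_command(product_line: str) -> str:
    """Return the text to type at the LID prompt for a given product line."""
    name = product_line.strip()
    # Reject empty names or names containing whitespace, since this sketch
    # assumes the product line is passed as a single word.
    if not name or any(ch.isspace() for ch in name):
        raise ValueError("product line must be a single non-empty word")
    return f"lapm {name} qmon"


if __name__ == "__main__":
    # "PROD" is a hypothetical product line name; substitute your own.
    print(build_qmon_command("PROD"))
```

For a product line named `PROD`, the text to type would be `lapm PROD qmon`.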

Next, find the AP record and delete it. This should clear the error. Then check whether the user can change or run the job. Refer to the screenshots below for a visual guide.