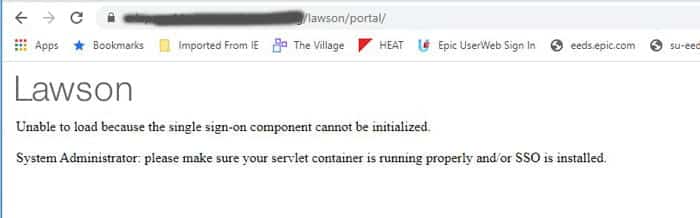

Recently we ran into the dreaded “Unable to load” error message in Portal, with no indication of why Portal couldn’t load. The most we could see in a Fiddler trace was that the request for the sso.js file was returning a 500 error. Nothing was logged anywhere: not on the WebSphere node or application server, and nothing in ios, security, or Event Viewer. Nothing!

We figured WebSphere had to be the culprit, because sso.js is packaged in an EAR file under WAS_HOME, and everything on the Portal side worked until it tried to hit that script. So we started digging into WebSphere and found that one of the certs on the node had expired. For some reason, a custom wildcard cert had been used for the WebSphere install on the node only. We opted to switch the node to the default self-signed cert, which had not expired; that was the solution that worked best for us. Another option, of course, would be to upload a new wildcard cert and replace the old one with it.
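Since an expired cert gave us no log entries at all, a quick expiry check is worth having on hand. Below is a minimal Python sketch, not something we ran during this incident. It assumes you have copied the cert's expiration date in OpenSSL's text format (for example, from the output of `openssl x509 -noout -enddate`). The function name `days_until_expiry` and the sample date are hypothetical.

```python
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Return whole days (rounded down) until the notAfter date; negative if expired.

    not_after uses the OpenSSL text format, e.g. "Jan  5 09:34:43 2018 GMT".
    """
    if now is None:
        now = time.time()
    # ssl.cert_time_to_seconds converts the date string to seconds since the epoch (UTC).
    expires_at = ssl.cert_time_to_seconds(not_after)
    return int((expires_at - now) // 86400)


if __name__ == "__main__":
    # Hypothetical value: paste the real notAfter date of your node's cert here.
    sample_not_after = "Jan  5 09:34:43 2018 GMT"
    remaining = days_until_expiry(sample_not_after)
    status = "EXPIRED" if remaining < 0 else "ok"
    print("%s: %d day(s) until expiry" % (status, remaining))
```

Running something like this on a schedule for each node's cert could flag the problem before Portal stops loading.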

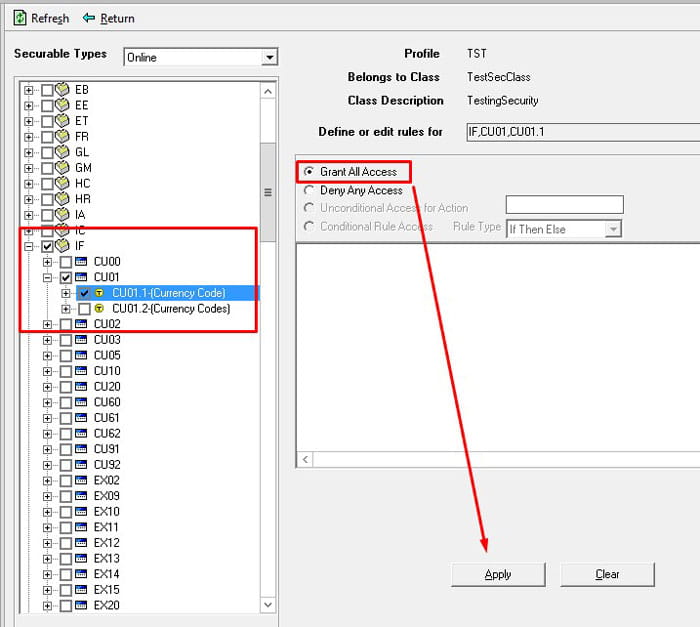

To switch a cert in WebSphere, go to Security > SSL certificate and key management > Key stores and certificates in the admin console. Select the NodeDefaultKeyStore of the node you want to change and, under Additional Properties on the right, select “Personal certificates”. Check the box next to your “old” cert and click “Replace”. Select the “new” certificate that will replace the old one, and check the “Delete old Certificate after replacement” box.
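We did the replacement through the console, but the same steps could probably be scripted with wsadmin in Jython mode. The sketch below is untested against a live cell and rests on assumptions: it relies on the `AdminTask.replaceCertificate` command and the parameter names shown, which you should confirm against the IBM documentation for your WebSphere version. The cell name, node name, and cert aliases are hypothetical placeholders, and `build_replace_args` is a helper made up for illustration.

```python
def build_replace_args(key_store_name, key_store_scope, old_alias, new_alias):
    """Build the bracketed option string for AdminTask.replaceCertificate.

    "-deleteOldCert true" mirrors the "Delete old Certificate after
    replacement" checkbox in the admin console.
    """
    return ("[-keyStoreName %s -keyStoreScope %s -certificateAlias %s "
            "-replacementCertificateAlias %s -deleteOldCert true]"
            % (key_store_name, key_store_scope, old_alias, new_alias))


# Hypothetical cell, node, and alias names; substitute your own.
args = build_replace_args(
    "NodeDefaultKeyStore",
    "(cell):myCell:(node):myNode",
    "old_wildcard_cert",
    "default",
)

try:
    AdminTask  # this object exists only inside a wsadmin session
except NameError:
    # Outside wsadmin: just show what would be passed to the command.
    print(args)
else:
    AdminTask.replaceCertificate(args)
    AdminConfig.save()  # persist the change to the master configuration
```

Outside wsadmin the script only prints the option string, which makes it easy to review before running it for real with `wsadmin -lang jython -f`.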

Bounce the WebSphere services or, better yet, reboot the server, and you should be good to go!